So You Want to Use AI for Sentiment Analysis?

A practical guide to what works, what doesn't, and why most off-the-shelf solutions fail on financial text. Learn why fine-tuned models trained on news, X/Twitter, and Reddit outperform generic LLMs by 26%.

Everyone's building with AI now. Plug in an API, write a prompt, ship it. Easy.

Except when it isn't.

If you've tried using ChatGPT or Claude to analyze market sentiment at scale, you've probably noticed something: the results are... inconsistent. Sometimes brilliant. Sometimes confidently wrong. And when you're making investment decisions, "sometimes wrong" isn't a feature—it's a portfolio risk.

We've spent the better part of a year wrestling with this problem at Compass AI. Here's what we've learned about sentiment analysis in the age of large language models—and why the obvious approaches often fail.

The Prompt Engineering Trap

The first thing everyone tries is prompt engineering. It's intuitive: just tell the AI what you want.

Analyze the sentiment of this headline about Apple stock.

Return BULLISH, BEARISH, or NEUTRAL.

Simple. Clean. And roughly 60-65% accurate on financial news.

That sounds okay until you realize a coin flip gets you 50% on a binary classification. You're barely beating random chance.

The problem isn't the model's intelligence—it's context. Financial language is deeply idiomatic. "Apple crushed earnings" is bullish. "Apple crushed the competition" is bullish. "Apple was crushed by supply chain issues" is bearish. "Analysts crushed their price targets" could go either way depending on direction.

Generic LLMs trained on internet text understand language. They don't understand markets.

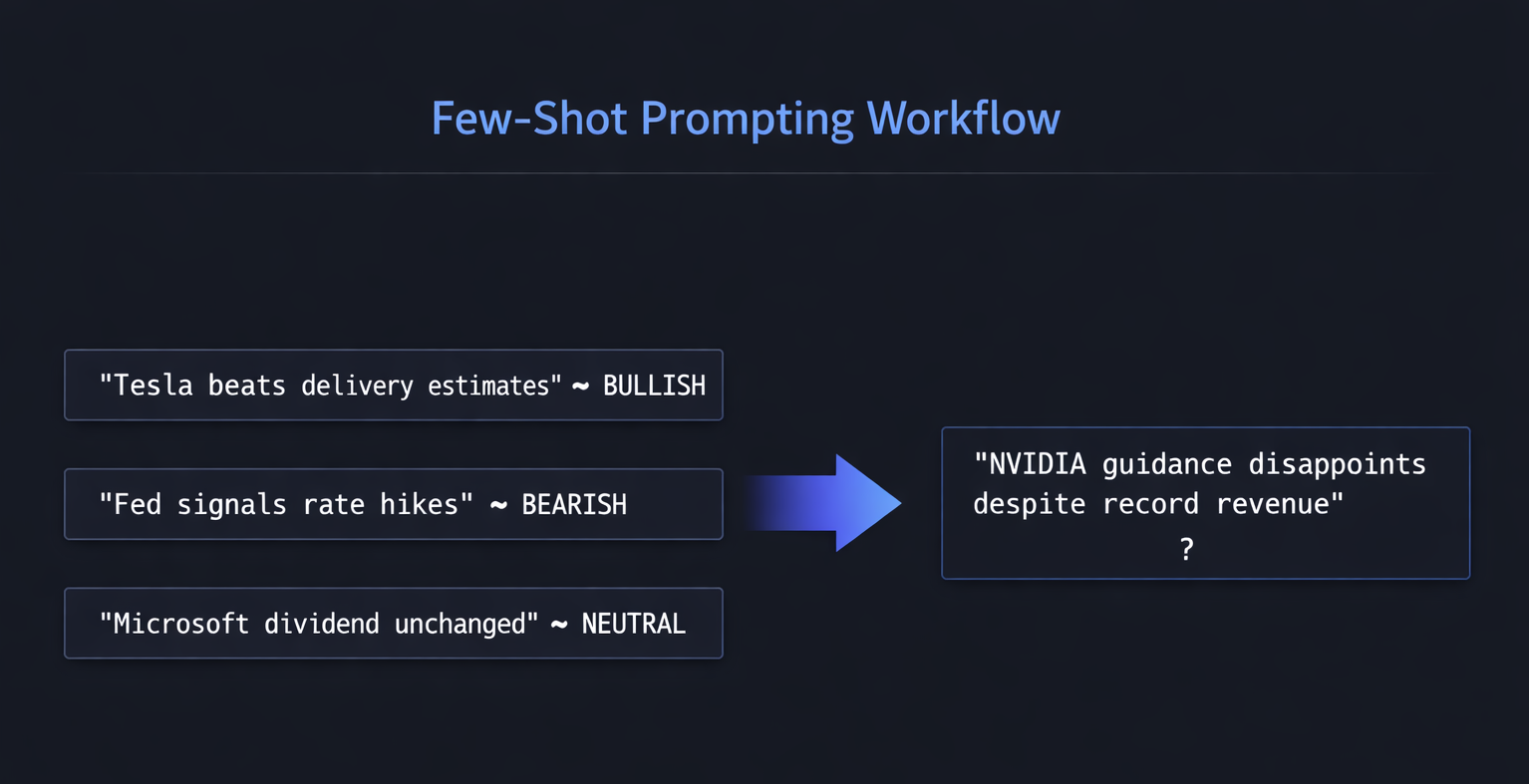

Few-Shot Learning: Better, But Brittle

The next level up is few-shot prompting—giving the model examples before asking it to classify new text.

Example 1: "Tesla beats delivery estimates" → BULLISH

Example 2: "Fed signals more rate hikes ahead" → BEARISH

Example 3: "Microsoft announces dividend unchanged" → NEUTRAL

Now classify: "NVIDIA guidance disappoints despite record revenue"

This helps. You're teaching the model your classification logic through examples. Accuracy jumps to 70-75% on clean headlines.

But here's where it breaks down:

Example selection bias. The examples you choose shape the output. Pick examples that are too obvious, and the model struggles with nuance. Pick examples that are too complex, and it overfits to edge cases.

Context window limits. You can only fit so many examples before you're burning tokens that could be used for actual analysis. At scale, this gets expensive fast.

Domain drift. Examples from tech earnings don't transfer well to biotech FDA decisions or energy sector geopolitics. You end up maintaining dozens of example sets—or accepting degraded performance.

Few-shot is a solid technique for prototyping. It's not a production-grade solution for financial sentiment.

Batch Prompting: Scaling the Wrong Thing

When you need to process thousands of headlines per day, batch prompting seems attractive. Send multiple items in a single request, reduce API calls, save money.

Classify sentiment for each headline:

1. "Amazon expands same-day delivery"

2. "Boeing faces new 737 MAX concerns"

3. "JPMorgan raises dividend 10%"

4. "Pfizer cuts full-year guidance"

...

The efficiency gain is real. The accuracy loss is also real.

LLMs exhibit what researchers call "position bias" in batch classification—items earlier in the list often get more careful attention than items later. We've measured 8-12% accuracy degradation on items 15+ in a batch compared to items 1-5.

There's also cross-contamination. Sentiment from one headline can "bleed" into the model's assessment of adjacent headlines, especially when they're about related topics or the same sector.

Batch prompting works for low-stakes applications. For financial analysis where the whole point is precision, it introduces noise you can't afford.

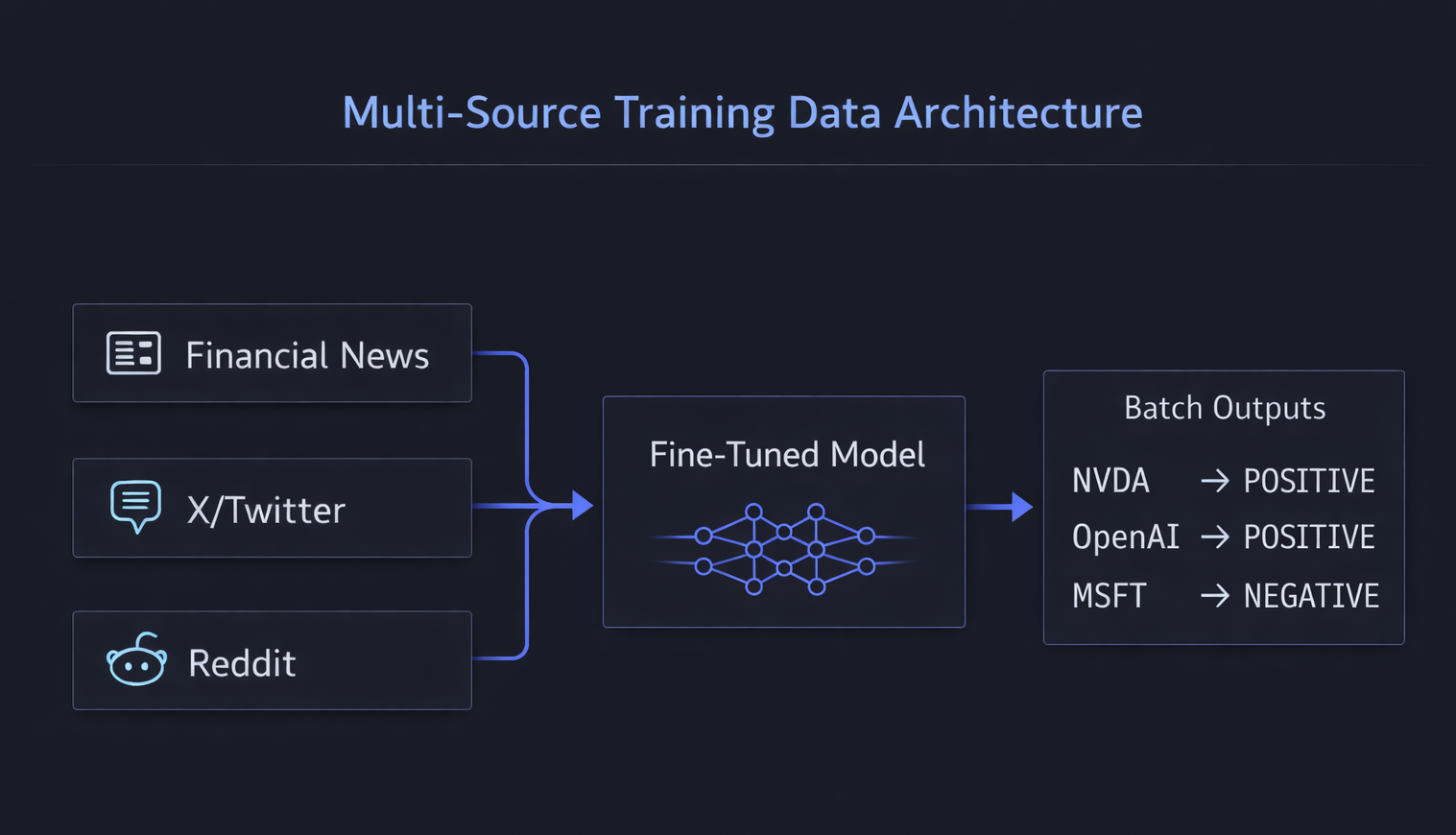

The Fine-Tuning Difference

Here's what actually moves the needle: fine-tuning models on domain-specific data.

Not prompt tricks. Not clever example selection. Training the model itself to understand financial language as financial language.

At Compass, we've fine-tuned our sentiment models on hundreds of thousands of labeled examples:

- Financial news headlines and articles across major outlets (Bloomberg, Reuters, WSJ, CNBC)

- X/Twitter posts from market influencers, analysts, and trading communities

- Reddit discussions from investing communities (r/stocks, r/wallstreetbets, r/investing)

- Earnings call transcripts

- SEC filings

- Analyst reports

The labeling isn't just BULLISH/BEARISH/NEUTRAL. We capture intensity (strong vs. weak signal), confidence (certain vs. speculative language), and temporal orientation (backward-looking report vs. forward-looking guidance).

The result: 88% accuracy on financial sentiment classification.

That's not a cherry-picked benchmark. That's measured against held-out test sets across multiple market sectors, news sources, and time periods.

For comparison on our multi-source test set:

- Zero-shot GPT-4: ~62% (research shows 54-68% on financial benchmarks)

- Few-shot GPT-4 (optimized examples): ~74%

- FinBERT (open-source financial sentiment model): ~82% (performs better on formal news, struggles with social media vernacular)

- Compass fine-tuned models: ~88%

The gap between 74% and 88% might look incremental. In practice, it's the difference between a signal you can trade on and noise you have to second-guess.

Why Multi-Source Training Data Matters

A note on training data, because this matters more than most people realize.

Traditional financial NLP models are trained on formal text: news articles, SEC filings, analyst reports. Clean, grammatical, professional.

But market sentiment doesn't live exclusively in 10-Ks and Bloomberg terminals. It lives across multiple channels—financial news, X/Twitter threads, Reddit discussions, Discord servers, and YouTube comments. Each source has distinct linguistic patterns:

Financial News:

- "Apple's guidance disappoints despite earnings beat" (nuanced, requires parsing qualifiers)

- Professional language with implicit context assumptions

X/Twitter:

- "$NVDA absolutely cooked after this guidance" (bearish, informal)

- Real-time reactions with ticker cashtags and trading slang

- "Inverse Cramer never fails" (ironic, requires cultural context)

Reddit:

- "Diamond hands on this dip, not selling" (bullish, defiant)

- "This DD is garbage, OP is a bagholder" (dismissive of bullish thesis)

- Community validation through upvotes and awards

Models trained only on formal financial text misread social media discourse entirely. They lack the cultural context to interpret how retail investors actually communicate about markets.

We trained across all three sources—not just the text, but the engagement signals (retweets, likes, upvotes, awards) as validation layers. When a sentiment trend appears simultaneously in news coverage, X discussions, and Reddit threads, that convergence carries stronger signal than any single source alone.

What This Means for You

If you're building sentiment analysis into your workflow, here's the honest assessment:

For quick prototyping: Few-shot prompting with GPT-4 or Claude is fine. You'll get directionally useful results without infrastructure investment.

For one-off analysis: Zero-shot with a good prompt works. Ask the model to explain its reasoning, not just classify. The explanation often reveals whether the classification is trustworthy.

For production systems at scale: You need fine-tuned models. The accuracy gap compounds over thousands of classifications. A 15% error rate on individual headlines becomes systematic bias in aggregate signals.

For retail investor sentiment specifically: You need models trained on how retail investors actually communicate—not just how analysts write.

Sentiment as a Foundation, Not the Destination

At Compass, sentiment analysis isn't the product—it's the foundation that powers everything else.

We use our fine-tuned sentiment models to:

- Annotate news in real-time - every headline gets a sentiment score so you can filter signal from noise

- Compute aggregate metrics - track how sentiment is shifting across news, social media, and Reddit for any stock

- Power AI-driven insights - sentiment feeds into our AI stock summaries, market analysis, and narrative detection

But sentiment scoring is just the starting point. The real value comes from what we build on top:

Real-time dashboards that surface which stocks are seeing sentiment spikes before they hit mainstream awareness.

AI-powered deep summaries that synthesize bullish and bearish perspectives—not generic LLM fluff, but structured analysis of what the optimists are weighing versus what the pessimists see.

Narrative processing that identifies emerging market stories and tracks how they evolve across news cycles and social platforms.

Sentiment analysis is a means to an end. The end is helping you make better decisions with better information.

That's what we're building toward.

Compass AI is currently in beta. If you want early access to AI-powered stock sentiment, news clustering, and bull/bear case analysis, check out compassaihq.com.

Ready to experience intelligent investing?

Join Compass AI and start making data-driven investment decisions today.

Get Started for Free